In recent years, artificial intelligence has come into wide use in fields ranging from technology and engineering to economics and society. Unstable Diffusion is AI software that generates images, and even animations, from text commands. Let’s learn about this tool with Coincu in this Unstable Diffusion Review.

What is Unstable Diffusion?

Unstable Diffusion AI is text-to-image software released to the public in 2022. It lets users generate highly detailed images from textual descriptions, opening up new creative possibilities.

Unlike some other AI image generators, Unstable Diffusion may lack a sleek user interface, but it compensates by being completely free to use on personal computers. Moreover, Unstable Diffusion extends its capabilities beyond text-to-image conversion. It excels in image-to-image translation, inpainting, and outpainting, broadening its utility across various tasks in digital artistry and design.

Users can use Unstable Diffusion not only for image creation but also for crafting videos and animations. The software runs on standard desktops or laptops equipped with a GPU, making high-quality image generation accessible to a broader audience.

Furthermore, Unstable Diffusion can be fine-tuned through transfer learning. With as few as five images, users can tailor the model to their specific requirements. The model is available to anyone who accepts its license, which distinguishes it from earlier models in its class.


How Unstable Diffusion works

Unstable Diffusion is built on a diffusion model. In principle, diffusion models use Gaussian noise to encode an image, then use a noise predictor together with a reverse diffusion process to recreate it. Starting from pure noise, the model can therefore generate images from scratch.

Unlike many other image generators, Unstable Diffusion does not work in the pixel space of the image. Instead, it works in a latent space: a compressed, lower-dimensional representation of the image.

For instance, a color image at a modest 512×512 resolution has 786,432 values (512 × 512 pixels × 3 color channels). In contrast, Unstable Diffusion works on a compressed representation that is 48 times smaller, with just 16,384 values. This reduction in data volume greatly lowers the computational requirements.
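The figures above can be checked with a few lines of Python. This is only a sketch of the arithmetic: the 64×64 grid size comes from the architecture description in this article, while the 4 latent channels are an assumption chosen because they reproduce the 16,384 figure.

```python
# Values in a 512x512 colour image: height * width * 3 colour channels.
pixel_values = 512 * 512 * 3

# Values in the compressed representation, assuming a 64x64 grid with
# 4 channels (the channel count is an assumption, not stated in the article).
latent_values = 64 * 64 * 4

# How many times smaller the compressed representation is.
compression_ratio = pixel_values / latent_values

print(pixel_values, latent_values, compression_ratio)
```

Running it prints `786432 16384 48.0`, matching the numbers quoted in the paragraph.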

Unstable Diffusion can run on a desktop equipped with an NVIDIA GPU that has 8 GB of memory. The smaller representation works because natural images are not random: they contain structure that can be compressed. To restore fine details such as facial features, Unstable Diffusion uses the decoder of a variational autoencoder (VAE).

The release of Unstable Diffusion V1 was a milestone in AI image generation. It was trained on three datasets compiled by LAION from Common Crawl, including the LAION-Aesthetics v2 6+ collection, which contains images with a predicted aesthetic score of 6 or higher. This reflects a focus on producing visually pleasing results.

Unstable Diffusion Architectures

Variational Autoencoder: Encoding Complexity

At the heart of Unstable Diffusion lies the variational autoencoder, which comprises an encoder and a decoder. The encoder compresses a 512×512 pixel image into a smaller 64×64 representation in latent space, where it is easier to manipulate.

The decoder then reconstructs a full-size 512×512 image from that latent representation.

Forward Diffusion: Unraveling Complexity

Forward diffusion progressively adds Gaussian noise to an image until only random noise remains and the original image is no longer recognizable. This process is used during training; afterwards, it is used only for image-to-image conversion.
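To make the idea concrete, here is a minimal sketch of forward diffusion on a handful of values, using only the Python standard library. The linear noise schedule, the step count of 1000, and all function names are assumptions chosen for illustration; they are not taken from the tool itself.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def forward_diffuse(values, betas, rng):
    """Add Gaussian noise step by step; return the values after every step."""
    history = [list(values)]
    current = list(values)
    for beta in betas:
        keep = math.sqrt(1.0 - beta)   # how much of the previous values survives
        noise_scale = math.sqrt(beta)  # how much fresh noise is mixed in
        current = [keep * v + noise_scale * rng.gauss(0.0, 1.0) for v in current]
        history.append(current)
    return history


def signal_fraction(betas):
    """Fraction of the original signal amplitude left after all steps."""
    remaining = 1.0
    for beta in betas:
        remaining *= math.sqrt(1.0 - beta)
    return remaining


rng = random.Random(0)
image = [0.5, -0.25, 1.0, 0.0]  # stand-in for a few pixel or latent values
betas = linear_beta_schedule(1000)
trajectory = forward_diffuse(image, betas, rng)
print(len(trajectory), signal_fraction(betas))
```

With this schedule, less than 1% of the original signal amplitude survives the final step, which is why the end result looks like pure noise.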

Reverse Diffusion: Unveiling Originality

In contrast, reverse diffusion is a parameterized process that iteratively undoes forward diffusion, removing a little of the noise at each step. Because the model is trained on a large and diverse set of images and guided by prompts, running the process from pure noise produces a new image that matches the prompt.
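The relationship between adding noise and removing it can be sketched in a few lines. In this toy example the noise "prediction" is simply the true noise, so the recovery is exact. A real model has to estimate the noise with a trained network and repeats the removal over many small steps. The function names and the `alpha_bar` value are illustrative assumptions.

```python
import math
import random


def add_noise(clean, noise, alpha_bar):
    """Mix clean values with Gaussian noise (closed-form forward step)."""
    a = math.sqrt(alpha_bar)
    b = math.sqrt(1.0 - alpha_bar)
    return [a * x + b * n for x, n in zip(clean, noise)]


def remove_predicted_noise(noisy, predicted_noise, alpha_bar):
    """Invert add_noise, given an estimate of the noise that was added."""
    a = math.sqrt(alpha_bar)
    b = math.sqrt(1.0 - alpha_bar)
    return [(x - b * n) / a for x, n in zip(noisy, predicted_noise)]


rng = random.Random(42)
clean = [0.8, -0.3, 0.1]
noise = [rng.gauss(0.0, 1.0) for _ in clean]
alpha_bar = 0.25  # fraction of signal variance kept at this noise level

noisy = add_noise(clean, noise, alpha_bar)
# Oracle: we pass the true noise instead of a network's prediction.
recovered = remove_predicted_noise(noisy, noise, alpha_bar)
print(recovered)
```

The better the noise estimate, the closer the recovered values are to the clean ones, which is why the quality of the noise predictor matters so much.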

Noise Prediction Engine (U-Net): Filtering Distortions

Central to denoising in Unstable Diffusion is a U-Net model, an architecture originally developed for image segmentation in biomedicine. Built on Residual Neural Network (ResNet) blocks, the noise predictor estimates the noise present in the latent representation and subtracts it, repeating this over a number of steps to refine the image.

The noise predictor is also sensitive to conditioning prompts, which steer the denoising process toward the requested image.

Text Transformation: Unleashing Creativity

Text prompts are the most common way to steer image generation in Unstable Diffusion. A CLIP tokenizer analyzes the prompt and embeds each token into a vector of 768 values.

A prompt can contain up to 75 tokens. The text transformer passes the resulting embeddings to the U-Net noise predictor. By changing the seed of the random number generator, users can generate different images in latent space from the same prompt.
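The shapes involved can be illustrated with a toy example. The sketch below is not the CLIP tokenizer or its learned embeddings: `toy_tokenize` just splits on whitespace and `toy_embed` assigns each token a fixed pseudo-random vector. Both are hypothetical stand-ins, kept only to show the 75-token limit and the 768 values per token.

```python
import random

MAX_PROMPT_TOKENS = 75  # token limit quoted in the article
EMBEDDING_SIZE = 768    # values per token quoted in the article


def toy_tokenize(prompt):
    """Whitespace split; a stand-in for a real tokenizer."""
    return prompt.lower().split()


def toy_embed(tokens, seed=0):
    """Give each distinct token a fixed pseudo-random vector of 768 values."""
    table = {}
    vectors = []
    for token in tokens[:MAX_PROMPT_TOKENS]:  # anything past the limit is dropped
        if token not in table:
            rng = random.Random(f"{seed}:{token}")
            table[token] = [rng.uniform(-1.0, 1.0) for _ in range(EMBEDDING_SIZE)]
        vectors.append(table[token])
    return vectors


tokens = toy_tokenize("a serene landscape with a colorful sunset")
embeddings = toy_embed(tokens)
print(len(embeddings), len(embeddings[0]))
```

For this seven-word prompt the result is 7 vectors of 768 values each, and the two occurrences of "a" share the same vector.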

Features of Unstable Diffusion

Unlike many other models in this field, Unstable Diffusion requires considerably less processing power, which makes it practical for a wide range of applications.

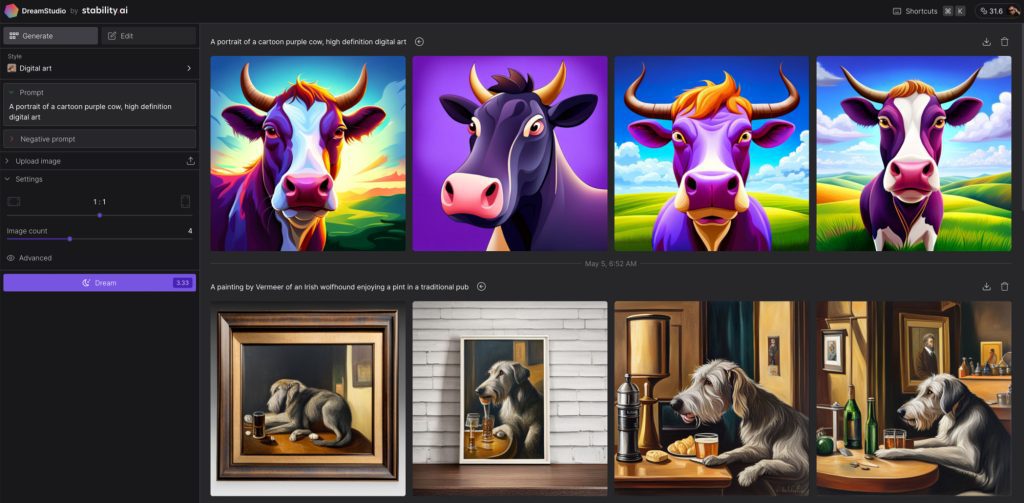

Converting Text to Image

The primary function of Unstable Diffusion is translating text into images. Users enter a text prompt and can produce different images by changing the seed number for the random generator or by adjusting the denoising schedule.
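The role of the seed is easy to demonstrate: the same seed always yields the same starting noise, and a different seed yields different noise. The helper below is a hypothetical illustration using Python's standard library, with an assumed 4×64×64 layout for the starting noise. It does not call the tool itself.

```python
import random


def initial_noise(seed, channels=4, height=64, width=64):
    """Draw the starting Gaussian noise for one image from a given seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(channels * height * width)]


first = initial_noise(seed=1234)
repeat = initial_noise(seed=1234)
other = initial_noise(seed=5678)

print(first == repeat)  # same seed, same starting noise
print(first == other)   # different seed, different starting noise
```

This is why noting down the seed of an image you like lets you regenerate it later, provided the prompt and other settings are unchanged.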

Transforming Images into Images

With Unstable Diffusion, users can take an existing image as input and generate a new one based on a text prompt. This is useful for tasks like turning sketches into fully realized visuals.

Crafting Graphics, Artwork, and Logos

The model’s versatility extends to the creation of graphics, artwork, and logos across various styles. Through a series of prompts, users can craft visually striking designs, although the output may not be entirely predictable, adding an element of spontaneity to the creative process.

Editing and Enhancing Images

Unstable Diffusion empowers users to edit and refine photographs with remarkable precision. Leveraging the AI Editor, individuals can manipulate images by using tools like the eraser brush to remove unwanted elements or by setting specific goals for modifications, such as restoring old photos, altering features, or seamlessly integrating new elements into existing images.

Animating Images and Creating Videos

Beyond static images, Unstable Diffusion enables the creation of dynamic content, including short videos and animations. Using tools such as Deforum, which is available on GitHub, users can apply various styles to their videos or animate still images to simulate movement.

How to Use Unstable Diffusion AI: A Step-by-Step Guide

Whether you’re an artist seeking inspiration or a designer in need of visual elements, Unstable Diffusion provides a user-friendly interface to facilitate the creation process. Here’s a comprehensive step-by-step guide on how to leverage the capabilities of Unstable Diffusion AI:

Step 1: Accessing the Platform

Commence your journey by visiting Unstable Diffusion’s website. Upon arrival, you’ll encounter a wealth of information regarding the platform’s operations. Notably, there’s an enticing option for users to explore the FREE version, which serves as an excellent entry point for newcomers.

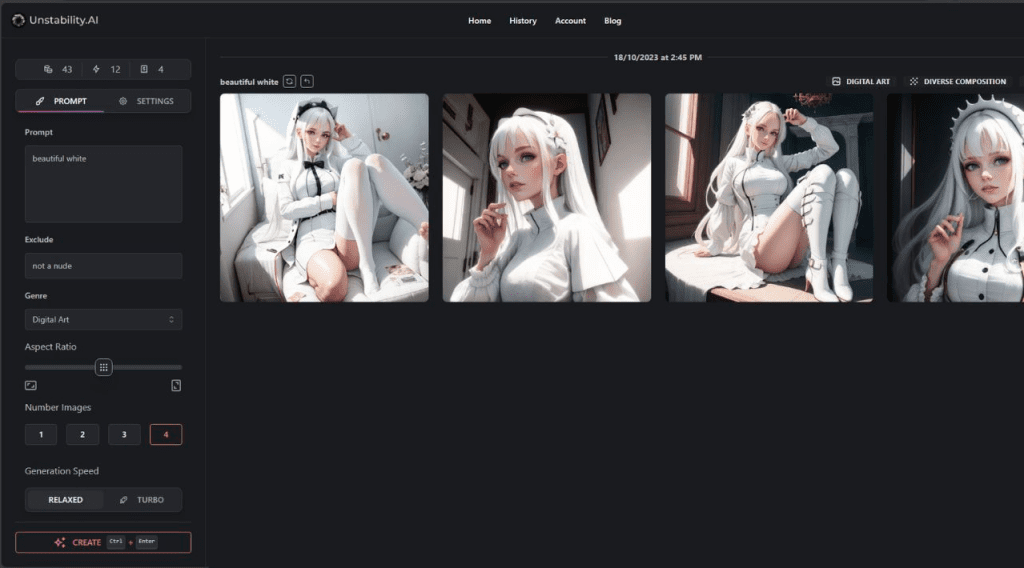

Step 2: Navigating to Image Generation

Upon opting for the free version, the website will redirect you to a designated section where image generation takes center stage. Here, you’ll encounter a text box prompting you to insert your desired command or text. Adjacent to it lies another blank box where the generated image will manifest.

Step 3: Account Management

Before you start creating, log in if you already have an account, or register if you’re new to the platform. An account is required to unlock the full set of features offered by Unstable Diffusion.

Step 4: Crafting the Image Prompt

Once logged in, commence the image generation process by specifying your desired image prompt. This involves entering instructions or a prompt that encapsulates the essence of the image you envision. For instance, you might input “a serene landscape with a colorful sunset” to guide the AI’s creative endeavor.

Step 5: Refinement with Exclusion Prompts

To further refine the image generation process, consider incorporating exclusion prompts. These prompts delineate specific conditions or elements that should be omitted from the generated image. For instance, if you wish to exclude any references to water bodies, simply input “water” as an exclusion prompt.

Step 6: Genre Selection

Unstable Diffusion offers a diverse array of image genres to cater to varying preferences and creative visions. Users can select from genres such as “realistic,” “cartoony,” or “abstract,” influencing the style and characteristics of the generated image.

Step 7: Customization Options

Enhance your creative control by adjusting the aspect ratio of the generated image using a convenient slider tool. Additionally, you have the flexibility to specify the number of images you wish to generate, allowing for exploration of different variations and possibilities.

Step 8: Initiating Image Generation

With all parameters meticulously set to align with your preferences, it’s time to breathe life into your vision. Simply click on the “Create” button to kickstart the image generation process.

Upon activation, Unstable Diffusion harnesses the power of its neural network model and diffusion modeling technique. These sophisticated algorithms work in tandem to interpret your prompts and specifications, weaving them into a coherent visual narrative.
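The settings collected in Steps 4 to 7 can be pictured as a single request object. The sketch below is purely illustrative: `GenerationRequest`, its field names, and its validation rules are hypothetical and do not describe the platform’s actual API. The genre names are the ones mentioned in Step 6.

```python
from dataclasses import dataclass

ALLOWED_GENRES = ("realistic", "cartoony", "abstract")  # genres named in the guide


@dataclass
class GenerationRequest:
    """Hypothetical container for the settings chosen in Steps 4 to 7."""
    prompt: str
    exclusion_prompt: str = ""
    genre: str = "realistic"
    aspect_ratio: tuple = (1, 1)  # width : height
    num_images: int = 1

    def validate(self):
        """Return a list of problems; an empty list means the request is usable."""
        problems = []
        if not self.prompt.strip():
            problems.append("prompt must not be empty")
        if self.genre not in ALLOWED_GENRES:
            problems.append(f"unknown genre: {self.genre}")
        width, height = self.aspect_ratio
        if width <= 0 or height <= 0:
            problems.append("aspect ratio must be positive")
        if self.num_images < 1:
            problems.append("num_images must be at least 1")
        return problems


request = GenerationRequest(
    prompt="a serene landscape with a colorful sunset",
    exclusion_prompt="water",
    genre="realistic",
    aspect_ratio=(16, 9),
    num_images=2,
)
print(request.validate())
```

Checking the settings before clicking “Create” in this way mirrors what the web form does for you: an empty prompt or an unsupported genre is caught before any image is generated.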

Why is Unstable Diffusion Important?

What sets Unstable Diffusion apart is its ability to run on consumer-grade graphics cards, which makes it accessible to a broad user base. One of its key highlights is its democratizing effect on image creation. For the first time, users can freely download models and generate images without extensive technical knowledge or specialized equipment.

Moreover, Unstable Diffusion offers users significant control over key hyperparameters, including the number of denoising steps and the level of noise applied. This level of customization lets users tailor the creative process to their preferences and artistic vision.
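As a rough illustration of how these two settings can interact, the sketch below follows one common convention for image-to-image generation: the noise level, expressed as a strength between 0 and 1, decides what share of the denoising steps is actually run. The function name and the rule itself are assumptions for illustration, not a description of the platform’s internals.

```python
def denoising_steps_to_run(num_steps, noise_strength):
    """Number of denoising steps executed for a given noise strength.

    noise_strength = 1.0 starts from pure noise and runs every step;
    lower values keep more of the input image and run fewer steps.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    if not 0.0 <= noise_strength <= 1.0:
        raise ValueError("noise_strength must be between 0 and 1")
    return min(int(num_steps * noise_strength), num_steps)


print(denoising_steps_to_run(30, 0.5))
print(denoising_steps_to_run(30, 1.0))
```

Under this convention, 30 steps at strength 0.5 run 15 denoising steps, while strength 1.0 runs all 30.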

Optimized Image Generation in Unstable Diffusion

Craft Detailed and Specific Prompts

The key to unlocking the full potential of Unstable Diffusion AI lies in the specificity and detail of your prompts. Instead of generic requests, provide detailed instructions and descriptions to guide the AI towards your desired outcome. Experiment with different combinations and variations to unleash unique and unexpected results that align with your creative vision.

Explore Diverse Genres and Aspect Ratios

Don’t limit yourself to a single style or aspect ratio. Embrace experimentation by exploring a wide range of image genres and aspect ratios within Unstable Diffusion AI. Whether you’re interested in surreal landscapes, abstract art, or portrait photography, try out different styles and compositions to discover what resonates best with your creative sensibilities.

Embrace the Unpredictability

One of the most exciting aspects of Unstable Diffusion AI is its unpredictable nature. Instead of viewing this unpredictability as a limitation, embrace it as an opportunity for exploration and discovery. Be open to trying out different prompts, settings, and techniques to uncover new and exciting possibilities that you may not have considered before.

Exercise Caution with Content Concerns

While Unstable Diffusion AI is a powerful tool for creative expression, it’s essential to exercise caution and mindfulness when generating content. Be aware of the potential for generating harmful or offensive material and take steps to mitigate these risks. Consider the impact of your prompts and settings on the final output, and strive to create content that is respectful and appropriate.

Pros And Cons Of Unstable Diffusion

Pros of Unstable Diffusion

High interactivity

Unstable Diffusion introduces an AI-driven chatbot that redefines the boundaries of user interaction. Through advanced algorithms, the platform facilitates human-like conversations, creating an immersive experience tailored for users seeking explicit interactions. This innovative feature transcends traditional chatbots, offering a level of engagement that blurs the lines between human and artificial intelligence interaction.

Good security

In an era where data privacy is paramount, Unstable Diffusion sets itself apart by prioritizing user privacy and security. Employing measures such as encryption and stringent privacy protocols, the platform protects users’ sensitive data. Users can engage with confidence, knowing that their personal information remains protected within the platform.

Cons of Unstable Diffusion

There are still limitations in the free version

Although Unstable Diffusion offers an array of features, its subscription-based model poses a barrier for users seeking free access. Certain basic functionalities are available at no cost, but premium services require a subscription, which limits access for individuals unwilling or unable to pay. This model, although common in the industry, may hinder the platform’s accessibility to a wider audience.

Not fully personalized

Despite its advancements in AI technology, Unstable Diffusion faces criticism regarding its ability to replicate the nuances of human interaction. While the AI-driven chatbot delivers realistic conversations, there are instances where it falls short of providing a personalized touch. This limitation could reduce the depth of the user experience, leaving some users wanting the authenticity and empathy of human interaction.

Use Cases of Unstable Diffusion

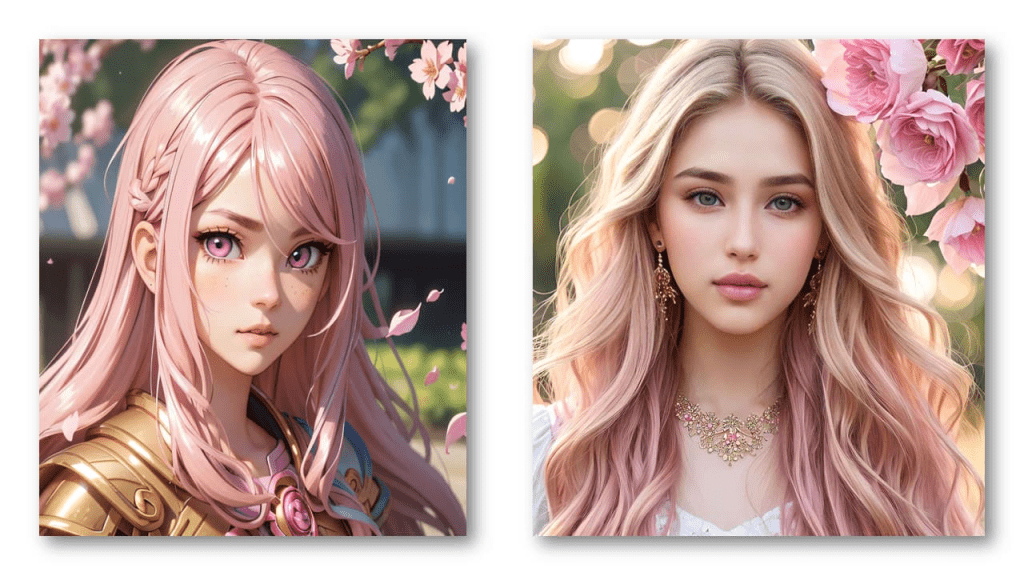

Artistic Exploration

One of its primary applications lies in artistic exploration. Artists are leveraging Unstable Diffusion to break the confines of traditional art forms, delving into the realm of digital art to pioneer new creative frontiers.

Content Creation

For bloggers, content creators, and marketers striving to carve a distinct identity in the digital sphere, Unstable Diffusion offers a valuable resource. Its capacity to generate unique visual content injects freshness and originality into their work, helping them stand out amidst the sea of online content.

Educational Endeavors

In the realm of education, Unstable Diffusion serves as an invaluable teaching aid. Educators are harnessing its potential to elucidate the intricacies of AI and image generation in a manner that captivates and engages students. By integrating this technology into their curriculum, educators are fostering an interactive learning environment that stimulates curiosity and facilitates deeper understanding.

Unstable Diffusion Review: Pricing, Licensing, and Accessibility

Introduction of Tiered Subscription Model:

Unstable Diffusion, a rising star in the digital realm, has introduced a groundbreaking tiered subscription model that revolutionizes user access to its platform.

Free Access To Basic Features Tier:

At the forefront of this model is the Free Access To Basic Features tier, which offers users complimentary access to foundational functionalities.

Tiered Subscription Plans:

The platform offers a range of subscription plans tailored to meet diverse user needs and preferences, including:

- Basic Tier: Providing limited access to features at no charge.

- Standard Tier: Unlocking additional functionalities and content for $9.99 per month.

- Premium Tier: Offering complete access to all premium features and exclusive content for $19.99 per month.

- Custom Tier: Tailored pricing based on personalized service requirements.

Premium Services And Value Proposition:

Each tier is designed to offer escalating levels of value, with the Premium Tier delivering an array of exclusive services and content to justify its price point.

What are the Unstable Diffusion alternatives?

RunDiffusion

RunDiffusion introduces a swift and accessible method for users to delve into AI-generated art creation. With pre-loaded models and a cloud-based infrastructure, users can initiate their artistic journey in just 90 seconds. This platform leverages powerful GPUs in the cloud, providing users with a fully controlled environment. Offering rental options by the hour, RunDiffusion presents a convenient avenue for artists to explore their creativity.

MidJourney

MidJourney is an independent research lab dedicated to expanding the creative horizons of humanity. Similar to established models like DALL-E and Stable Diffusion, MidJourney uses generative AI to create images from natural language prompts. It is accessible through a Discord bot, so users can generate an image with a simple command. Moreover, the platform is actively developing a web interface, promising further accessibility and functionality in the near future.

DALL-E

Developed by OpenAI, DALL-E remains a pioneering force in the realm of AI-driven visual synthesis. Utilizing transformer networks and generative models, DALL-E interprets textual descriptions to create visually accurate representations. Its innovative approach continues to inspire creativity and exploration in the AI art community.

CLIP (Contrastive Language-Image Pre-Training)

OpenAI’s CLIP represents a breakthrough in AI comprehension, seamlessly integrating text and images. Renowned for its versatility, CLIP has found applications in text-to-image generation, object detection, and image categorization. Its adaptability makes it a valuable asset across various domains of AI-driven visual synthesis.

Craiyon

Craiyon emerges as a versatile AI model capable of transforming language queries into stunning graphics. Formerly known as DALL-E Mini, Craiyon offers both a mobile app and an online demo for users to experience its capabilities firsthand. With its latest iteration, Craiyon V35, users can expect enhanced performance and refined results. The platform invites users to explore the possibilities of AI-driven art creation at no cost through its accessible online interface.

Conclusion

At the heart of Unstable Diffusion AI lies its remarkable ability to transform ordinary noise into intricate works of art. Through a delicate interplay of algorithms and neural networks, the tool breathes life into digital canvases, turning simple inputs into mesmerizing visual creations.

The sheer complexity and beauty of the resulting artwork stand as a testament to the power of AI-driven innovation. Hopefully, Coincu’s Unstable Diffusion Review article has helped you understand more about this tool.
