Key Points:

- Vitalik Buterin’s outlook on the future of technology stresses that the direction of technological progress matters and that active human intention is needed to choose the desired outcomes.

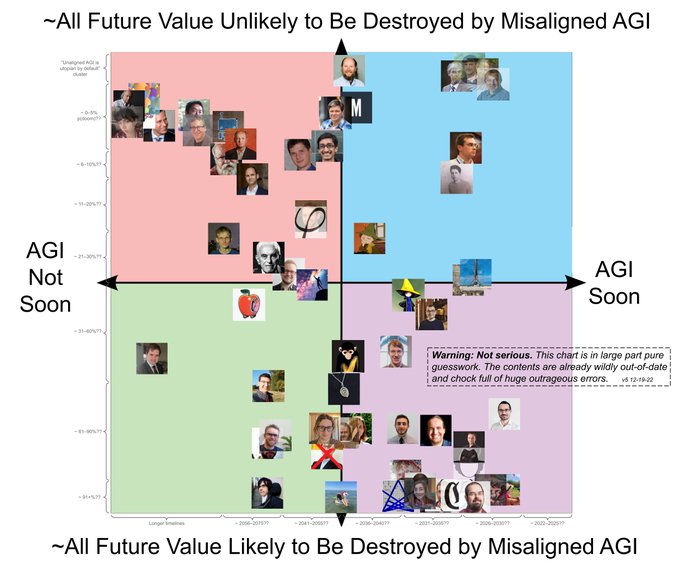

- Buterin highlights the risks associated with superintelligent AI, questioning the desirability of a future dominated by it, and emphasizes the need for careful consideration of rapid technological advances.

Vitalik’s tech future outlook promotes embracing technology, rejecting stagnation, and prioritizing advancements. He discusses the risks of superintelligent AI and emphasizes the need for careful consideration of technological progress.

Marc Andreessen’s “Techno-Optimist Manifesto” emphasizes the importance of embracing technology and markets for a brighter future. It rejects stagnation and argues for prioritizing advancement over preserving the status quo.

The manifesto has attracted significant attention and drawn responses ranging from positive to negative. The leadership dispute at OpenAI further fueled the debate, which has focused on the risks of superintelligent AI and concerns about the pace of development.

Buterin’s own perspective on techno-optimism is warm but nuanced. He believes in a future where transformative technology leads to significant improvements, and he trusts in the potential of humanity.

However, he emphasizes the importance of the direction of technological advancements. Certain technologies have a more positive impact than others, and some can mitigate the negative consequences of other technologies.

Vitalik’s Tech Future Outlook: AI is fundamentally different from other tech

Buterin argues that active human intention is necessary to choose the desired directions, as relying solely on profit maximization won’t automatically lead to the desired outcomes.

His essay also touches on environmental concerns and the need for coordinated efforts to address them. Buterin acknowledges the risks associated with superintelligent AI, including the potential for human extinction.

He raises questions about whether a future dominated by superintelligent AI is desirable, referencing Iain Banks’s Culture series as an example of a positive depiction. Buterin highlights the importance of carefully considering the implications of rapid technological advances, as they are likely to be the most significant social issue of the 21st century.
